OpenAPI Support

MLServer follows the Open Inference Protocol (previously known as the “V2 Protocol”). You can find the full OpenAPI spec for the Open Inference Protocol in the links below:

| Name | Description | OpenAPI Spec |
|---|---|---|
| Open Inference Protocol | Main dataplane for inference, health and metadata | |
| Model Repository Extension | Extension to the protocol to provide a control plane which lets you load / unload models dynamically | |

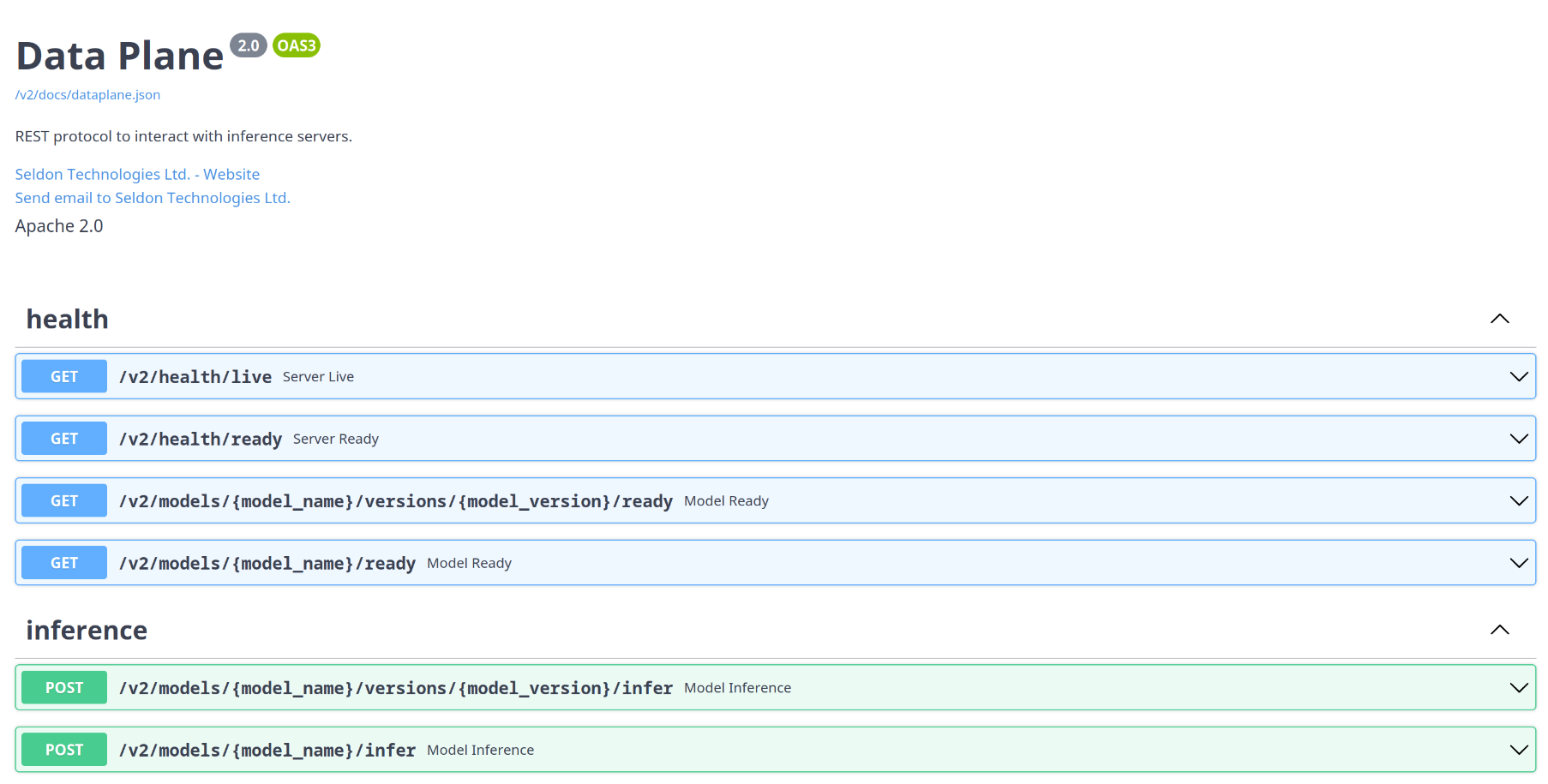

Swagger UI

On top of the OpenAPI spec above, MLServer also autogenerates a Swagger UI, which you can use to interact dynamically with the Open Inference Protocol.

The autogenerated Swagger UI can be accessed under the /v2/docs endpoint.

Note

Besides the Swagger UI, you can also access the raw OpenAPI spec through the /v2/docs/dataplane.json endpoint.
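As a rough illustration of how the raw spec could be consumed programmatically, the sketch below downloads it with Python's standard library and lists the documented paths. The base URL `http://localhost:8080` is an assumption about where MLServer's HTTP server is listening, and the helper names `openapi_spec_url`, `fetch_openapi_spec` and `list_documented_paths` are hypothetical, not part of MLServer.

```python
import json
from urllib.request import urlopen

# Assumption: MLServer's HTTP server is reachable at this address.
# Adjust it to match your own deployment.
BASE_URL = "http://localhost:8080"


def openapi_spec_url(base_url: str = BASE_URL) -> str:
    """Build the URL of the raw OpenAPI spec endpoint."""
    return base_url.rstrip("/") + "/v2/docs/dataplane.json"


def fetch_openapi_spec(base_url: str = BASE_URL, timeout: float = 5.0) -> dict:
    """Download the raw OpenAPI spec and parse it as JSON."""
    with urlopen(openapi_spec_url(base_url), timeout=timeout) as response:
        return json.load(response)


def list_documented_paths(spec: dict) -> list:
    """Return the sorted endpoint paths declared in an OpenAPI document."""
    return sorted(spec.get("paths", {}))
```

With a server running, `list_documented_paths(fetch_openapi_spec())` should return the endpoint paths described by the spec.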

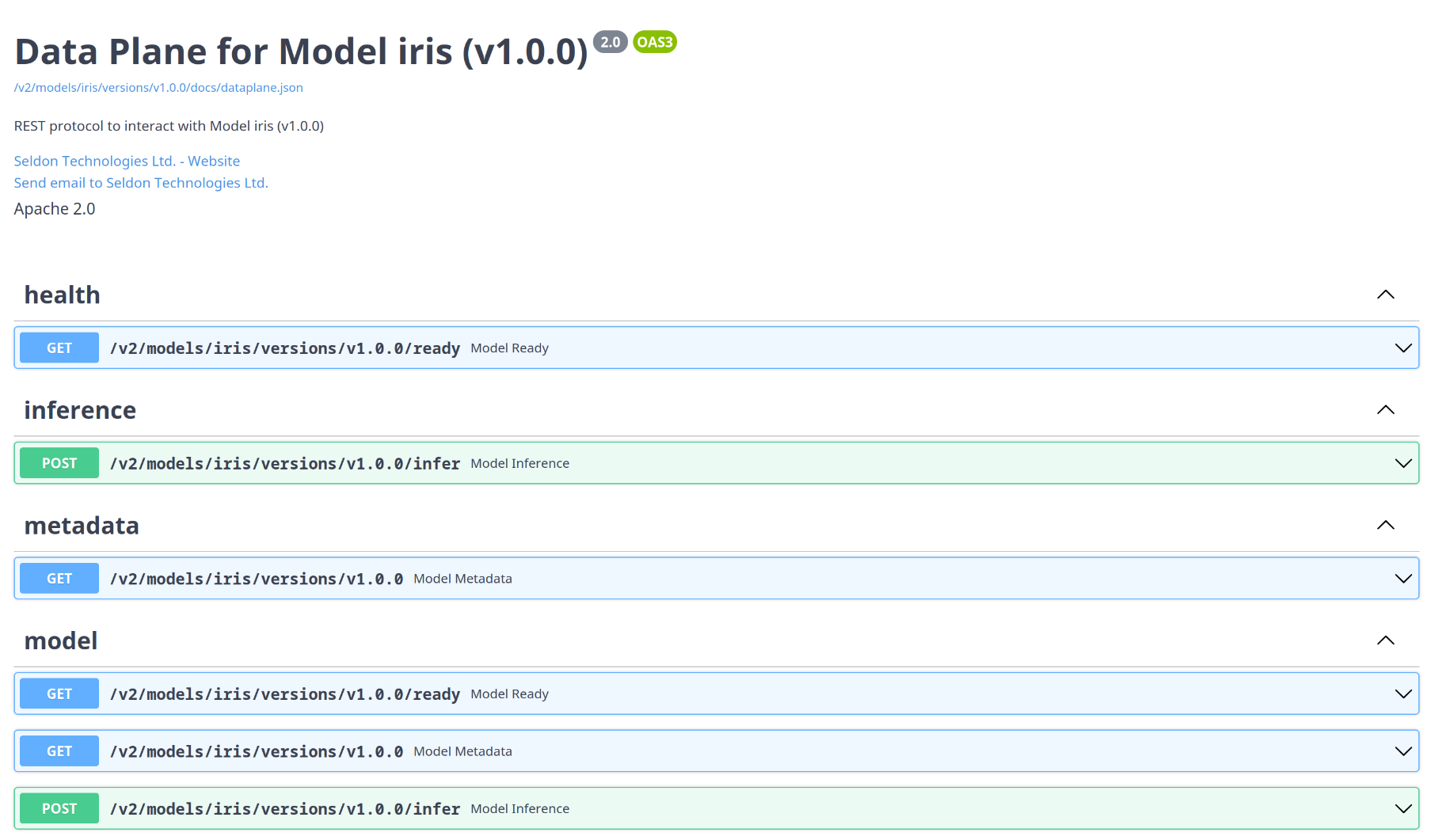

Model Swagger UI

Alongside the general API documentation, MLServer will also autogenerate a Swagger UI tailored to individual models, showing the endpoints available for each one.

The model-specific autogenerated Swagger UI can be accessed under the following endpoints:

- /v2/models/{model_name}/docs
- /v2/models/{model_name}/versions/{model_version}/docs

Note

Besides the Swagger UI, you can also access the model-specific raw OpenAPI spec through the following endpoints:

- /v2/models/{model_name}/docs/dataplane.json
- /v2/models/{model_name}/versions/{model_version}/docs/dataplane.json
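The model-specific endpoints all follow one pattern: an optional version segment, then `/docs`, then an optional `/dataplane.json` suffix for the raw spec. A minimal sketch of that pattern is shown below. The helper `model_docs_path` and the model name `iris` are hypothetical and used only for illustration.

```python
from typing import Optional
from urllib.parse import quote


def model_docs_path(
    model_name: str,
    model_version: Optional[str] = None,
    raw_spec: bool = False,
) -> str:
    """Build the path of a model-specific Swagger UI or raw OpenAPI spec.

    Hypothetical helper: it only assembles the endpoint patterns listed
    above and does not talk to the server.
    """
    # Percent-encode the name so that characters such as "/" stay inside
    # a single path segment.
    path = "/v2/models/" + quote(model_name, safe="")
    if model_version is not None:
        path += "/versions/" + quote(model_version, safe="")
    path += "/docs"
    if raw_spec:
        path += "/dataplane.json"
    return path


# Example usage with a hypothetical model called "iris"
print(model_docs_path("iris"))
print(model_docs_path("iris", "v1", raw_spec=True))
```

Prefixing the returned path with the server's base URL gives an address that can be opened in a browser (for the Swagger UI) or fetched as JSON (for the raw spec).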